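TensorFlow Study Notes (Part 1)

Date: 2023-08-07 18:37:00

What "TensorFlow" means

"Tensor" refers to an array with any number of dimensions, a generalization of a matrix, and TensorFlow tensors can be computed on a GPU. Loosely speaking, TensorFlow is a framework for computing on such arrays.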
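To make "any number of dimensions" concrete, here is a minimal sketch that uses NumPy arrays as stand-ins for tensors (an assumption made for illustration; the variable names are hypothetical). The rank of a tensor is simply its number of axes.

```python
import numpy as np

# NumPy arrays stand in for tensors here; rank = number of axes.
scalar = np.array(3.0)                        # rank 0: a single number
vector = np.array([1.0, 2.0, 3.0])            # rank 1: a list of numbers
matrix = np.array([[1.0, 9.0], [3.0, 6.0]])   # rank 2: rows and columns
cube = np.zeros((2, 3, 4))                    # rank 3: a stack of matrices

ranks = [a.ndim for a in (scalar, vector, matrix, cube)]
shapes = [a.shape for a in (scalar, vector, matrix, cube)]
print(ranks)
print(shapes)
```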

Basic operations

import tensorflow as tf
import numpy as np

# Check the TensorFlow version
print(tf.__version__)
2.8.0

x = [[1.]]
m = tf.matmul(x, x)  # matrix product: multiplies matrix a by matrix b
print(m)
tf.Tensor([[1.]], shape=(1, 1), dtype=float32)

x = tf.constant([[1, 9], [3, 6]])
print(x)
tf.Tensor(
[[1 9]
 [3 6]], shape=(2, 2), dtype=int32)

x = tf.add(x, 1)  # the scalar 1 is added to every element
print(x)
tf.Tensor(
[[ 2 10]
 [ 4  7]], shape=(2, 2), dtype=int32)

Converting formats

x.numpy()

[[ 2 10]

[ 4 7]]

x = tf.cast(x, tf.float32)  # cast the tensor to float32
print(x)

tf.Tensor(

[[ 2. 10.]

[ 4. 7.]], shape=(2, 2), dtype=float32)

Regression prediction

TensorFlow 2 makes heavy use of Keras's concise model-building API.

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import tensorflow as tf

# Public Keras API. The private tensorflow.python.keras path makes model.summary() list layers as ModuleWrapper.
from tensorflow.keras import layers

import os

os.environ['TF_CPP_MIN_LOG_LEVEL']='2'

The experiment uses a temperature dataset.

features = pd.read_csv('temps0.csv')

print(features.head())

year month day week temp_2 temp_1 average friend

0 2016 1 1 Fri 45 45 45.6 29

1 2016 1 2 Sat 44 45 45.7 61

2 2016 1 3 Sun 45 44 45.8 56

3 2016 1 4 Mon 44 41 45.9 53

4 2016 1 5 Tues 41 40 46.0 41

print("Data shape:", features.shape)

Data shape: (348, 8)

Handling the date columns

import datetime

years=features['year']

months=features['month']

days=features['day']

# Build 'YYYY-M-D' strings, then parse them into datetime objects

dates = [str(int(year)) + '-' + str(int(month)) + '-' + str(int(day)) for year, month, day in zip(years, months, days)]

dates = [datetime.datetime.strptime(date, '%Y-%m-%d') for date in dates]

print(dates[:5])

[datetime.datetime(2016, 1, 1, 0, 0), datetime.datetime(2016, 1, 2, 0, 0), datetime.datetime(2016, 1, 3, 0, 0), datetime.datetime(2016, 1, 4, 0, 0), datetime.datetime(2016, 1, 5, 0, 0)]
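The string round trip above works, but the same result can be had by passing the integers straight to the `datetime.datetime` constructor. A minimal sketch, assuming three hypothetical sample rows that mirror the start of the dataset:

```python
import datetime

# Hypothetical sample values mirroring the first rows of the dataset.
years = [2016, 2016, 2016]
months = [1, 1, 1]
days = [1, 2, 3]

# Build datetime objects directly, without formatting and re-parsing strings.
dates = [
    datetime.datetime(int(y), int(m), int(d))
    for y, m, d in zip(years, months, days)
]
print(dates[:2])
```

With a real DataFrame the three columns would replace the hypothetical lists; the `int(...)` casts are kept because pandas may hand back NumPy integer types.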

Before plotting, the data needs preprocessing: removing outliers, cleaning the data, and so on.

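# Convert everything to numeric form (one-hot encodes the weekday column)
features = pd.get_dummies(features)
print(features.head(5))
   year  month  day  temp_2  ...  week_Sun  week_Thurs  week_Tues  week_Wed
0  2016      1    1      45  ...         0           0          0         0
1  2016      1    2      44  ...         0           0          0         0
2  2016      1    3      45  ...         1           0          0         0
3  2016      1    4      44  ...         0           0          0         0
4  2016      1    5      41  ...         0           0          1         0
[5 rows x 14 columns]

# Label values (assumed to be the 'actual' column, which is dropped from the features next)
labels = np.array(features['actual'])
# Remove the label from the features
features = features.drop('actual', axis=1)
# Save the column names separately
features_list = list(features.columns)
# Convert to a NumPy array
features = np.array(features)
print(features.shape)
(348, 14)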
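To see what `pd.get_dummies` does to a text column, here is a minimal sketch on a tiny hypothetical frame (the values are made up for illustration). Numeric columns pass through unchanged, while each distinct string becomes its own 0/1 column. `dtype=int` is passed because newer pandas versions return booleans by default.

```python
import pandas as pd

# Tiny hypothetical frame: one numeric column, one text column.
df = pd.DataFrame({"temp_1": [45, 45, 44], "week": ["Fri", "Sat", "Sun"]})

# One-hot encode the text column; numeric columns are left as they are.
encoded = pd.get_dummies(df, dtype=int)
print(list(encoded.columns))
print(encoded["week_Sat"].tolist())
```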

Standardize the features (zero mean, unit variance per column)

from sklearn import preprocessing

input_features=preprocessing.StandardScaler().fit_transform(features)

print(input_features[0])
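`StandardScaler` subtracts each column's mean and divides by its standard deviation. The sketch below reproduces that by hand with NumPy on a small hypothetical array, to show what the transformed values represent.

```python
import numpy as np

# Hypothetical data: 3 samples, 2 features on very different scales.
X = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])

mean = X.mean(axis=0)
std = X.std(axis=0)  # population std (ddof=0), as StandardScaler uses
Z = (X - mean) / std

print(Z)
```

After the transform every column has mean 0 and standard deviation 1, so features measured on different scales contribute comparably to the gradient updates.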

Building the network model with Keras

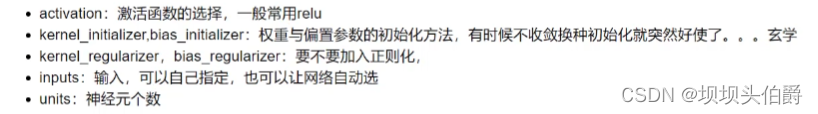

Some commonly used parameters

model=tf.keras.Sequential()

model.add(layers.Dense(16))  # fully connected layer

model.add(layers.Dense(32))

model.add(layers.Dense(1))

compile configures the network: it specifies the optimizer, the loss function, and so on.

model.compile(optimizer=tf.keras.optimizers.SGD(0.001),loss='mean_squared_error')

model.fit(input_features,labels,validation_split=0.25,epochs=10,batch_size=64)

Epoch 1/10

5/5 [==============================] - 2s 44ms/step - loss: 4202.6343 - val_loss: 3279.2751

Epoch 2/10

5/5 [==============================] - 0s 10ms/step - loss: 1709.3342 - val_loss: 4615.9727

Epoch 3/10

5/5 [==============================] - 0s 9ms/step - loss: 225.1389 - val_loss: 3343.1311

Epoch 4/10

5/5 [==============================] - 0s 9ms/step - loss: 105.3543 - val_loss: 2275.7815

Epoch 5/10

5/5 [==============================] - 0s 9ms/step - loss: 101.7793 - val_loss: 1845.8273

Epoch 6/10

5/5 [==============================] - 0s 9ms/step - loss: 75.1766 - val_loss: 1353.8687

Epoch 7/10

5/5 [==============================] - 0s 9ms/step - loss: 113.6695 - val_loss: 1052.7235

Epoch 8/10

5/5 [==============================] - 0s 10ms/step - loss: 63.4713 - val_loss: 779.7868

Epoch 9/10

5/5 [==============================] - 0s 10ms/step - loss: 57.4578 - val_loss: 649.0630

Epoch 10/10

5/5 [==============================] - 0s 10ms/step - loss: 39.8692 - val_loss: 638.9788
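The `5/5` in the log above is the number of batches per epoch, and it follows from the arguments passed to `fit`. A rough sketch of the arithmetic, assuming Keras keeps the first `floor(n * (1 - validation_split))` samples for training and holds out the rest:

```python
import math

n_samples = 348          # rows in the dataset
validation_split = 0.25  # fraction held out for validation
batch_size = 64

# Training samples that remain after the validation split.
n_train = math.floor(n_samples * (1 - validation_split))
n_val = n_samples - n_train

# Batches per epoch; the last batch may be smaller than batch_size.
steps_per_epoch = math.ceil(n_train / batch_size)

print(n_train, n_val, steps_per_epoch)
```

Note that `validation_split` takes the held-out samples from the end of the arrays before shuffling. Because this dataset is ordered by date, the validation set is probably the last part of the year, which may contribute to the gap between `loss` and `val_loss`.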

model.summary()

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

module_wrapper (ModuleWrapp (None, 16) 240

er)

module_wrapper_1 (ModuleWra (None, 32) 544

pper)

module_wrapper_2 (ModuleWra (None, 1) 33

pper)

=================================================================

Total params: 817

Trainable params: 817

Non-trainable params: 0
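The parameter counts in the summary can be checked by hand: a dense layer with `n_in` inputs and `n_out` units has `n_in * n_out` weights plus `n_out` biases. A small sketch, assuming the 14 input features reported earlier (the helper name `dense_params` is made up for illustration):

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

# 14 input features, then layers with 16, 32, and 1 units.
layer_sizes = [14, 16, 32, 1]
counts = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]

print(counts, sum(counts))
```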

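The results suggest some overfitting: the training loss ends up far below the validation loss. After changing the weight initialization method: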

model.add(layers.Dense(16,kernel_initializer='random_normal'))

model.add(layers.Dense(32,kernel_initializer='random_normal'))

model.add(layers.Dense(1,kernel_initializer='random_normal'))

Epoch 1/10

5/5 [==============================] - 1s 39ms/step - loss: 4390.7549 - val_loss: 2869.1125

Epoch 2/10

5/5 [==============================] - 0s 10ms/step - loss: 4295.9175 - val_loss: 2789.7224

Epoch 3/10

5/5 [==============================] - 0s 11ms/step - loss: 4186.6431 - val_loss: 2676.1052

Epoch 4/10

5/5 [==============================] - 0s 10ms/step - loss: 3986.0823 - val_loss: 2471.7280

Epoch 5/10

5/5 [==============================] - 0s 11ms/step - loss: 3223.1704 - val_loss: 2771.4094

Epoch 6/10

5/5 [==============================] - 0s 10ms/step - loss: 387.2784 - val_loss: 1830.3267

Epoch 7/10

5/5 [==============================] - 0s 11ms/step - loss: 65.9947 - val_loss: 1299.6189

Epoch 8/10

5/5 [==============================] - 0s 11ms/step - loss: 67.4968 - val_loss: 1037.1642

Epoch 9/10

5/5 [==============================] - 0s 10ms/step - loss: 44.5196 - val_loss: 702.2187

Epoch 10/10

5/5 [==============================] - 0s 10ms/step - loss: 45.0128 - val_loss: 681.6292

When we add a regularization penalty term:

model.add(layers.Dense(16, kernel_initializer='random_normal', kernel_regularizer=tf.keras.regularizers.l2(0.03)))
model.add(layers.Dense(32, kernel_initializer='random_normal', kernel_regularizer=tf.keras.regularizers.l2(0.03)))
model.add(layers.Dense(1, kernel_initializer='random_normal', kernel_regularizer=tf.keras.regularizers.l2(0.03)))

Epoch 1/10
5/5 [==============================] - 1s 37ms/step - loss: 4388.7852 - val_loss: 2865.3335
Epoch 2/10
5/5 [==============================] - 0s 10ms/step - loss: 4283.2266 - val_loss: 2770.2195
Epoch 3/10
5/5 [==============================] - 0s 10ms/step - loss: 4119.2578 - val_loss: 2610.3687
Epoch 4/10
5/5 [==============================] - 0s 10ms/step - loss: 3604.5222 - val_loss: 2832.3276
Epoch 5/10
5/5 [==============================] - 0s 10ms/step - loss: 646.9486 - val_loss: 1566.8936
Epoch 6/10
5/5 [==============================] - 0s 10ms/step - loss: 65.9607 - val_loss: 1254.3547
Epoch 7