[Docker Swarm] Building a Highly Available Docker Swarm Cluster (Detailed Guide)

Date: 2022-11-30 07:30:00

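Contents

- 1️⃣ What is Docker Swarm?
- 2️⃣ Docker Swarm commands
- 3️⃣ Planning the Docker Swarm cluster nodes
- 4️⃣ Creating a highly available Docker Swarm cluster, step by step
  - 📃 Preparing the environment
  - 🎦 Creating the cluster
    - 🍎 docker-m1 (manager)
    - 🍑 docker-m2 (manager)
    - 🍊 docker-m3 (manager)
    - 🍌 docker-n1 (worker)
    - 🍐 docker-n2 (worker)
  - 🔨 Checking the configuration
- 5️⃣ Changing roles: demoting a manager to a worker
- 6️⃣ Changing roles: promoting a worker to a manager
- 7️⃣ Removing and re-adding a manager node
- 8️⃣ Removing and re-adding a worker node
- 9️⃣ Deploying an NGINX test application in the cluster
  - 🏉 The service help command
  - ⚾️ Creating the NGINX service
  - 🏀 Inspecting the NGINX service
  - 🏈 Creating multiple NGINX service replicas
  - 🏐 Simulating a failure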

1??什么是Docker Swarm?

- Docker Swarm 是什么?

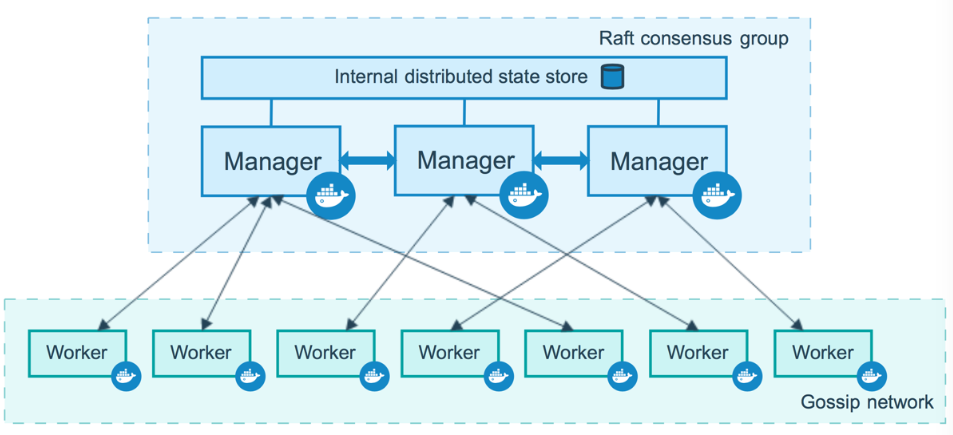

Docker Swarm 是 Docker 集群管理工具 Docker 主机抽象成一个整体,通过入口统一管理 Docker 各种主机 Docker 资源。Docker Swarm 一个或多个 Docker 节点组织起来,使得用户能够以集群方式管理它们。

- Docker Swarm 组成部分

swarm 集群由

管理节点(Manager)和工作节点(Worker)构成。管理节点:主要负责整个集群的管理,包括集群配置、服务管理等与集群相关的所有工作。如监控集群状态,将任务分配到工作节点。

工作节点:主要负责操作服务的执行。

2️⃣ Docker Swarm commands

See: docker swarm | Docker documentation

# List the docker swarm subcommands

[root@docker01 ~]# docker swarm

Usage: docker swarm COMMAND

Manage Swarm

Commands:

ca Display and rotate the root CA

init Initialize a swarm

join Join a swarm as a node and/or manager

join-token Manage join tokens

leave Leave the swarm

unlock Unlock swarm

unlock-key Manage the unlock key

update Update the swarm

Run 'docker swarm COMMAND --help' for more information on a command.

# Show the options of docker swarm init, the command that initializes a cluster

[root@docker01 ~]# docker swarm init --help

Usage: docker swarm init [OPTIONS]

Initialize a swarm

Options:

--advertise-addr string Advertised address (format: <ip|interface>[:port])

--autolock Enable manager autolocking (requiring an unlock key to start a stopped manager)

--availability string Availability of the node ("active"|"pause"|"drain") (default "active")

--cert-expiry duration Validity period for node certificates (ns|us|ms|s|m|h) (default 2160h0m0s)

--data-path-addr string Address or interface to use for data path traffic (format: <ip|interface>)

--data-path-port uint32 Port number to use for data path traffic (1024 - 49151). If no value is set or is set to 0, the default port (4789) is used.

--default-addr-pool ipNetSlice default address pool in CIDR format (default [])

--default-addr-pool-mask-length uint32 default address pool subnet mask length (default 24)

--dispatcher-heartbeat duration Dispatcher heartbeat period (ns|us|ms|s|m|h) (default 5s)

--external-ca external-ca Specifications of one or more certificate signing endpoints

--force-new-cluster Force create a new cluster from current state

--listen-addr node-addr Listen address (format: <ip|interface>[:port]) (default 0.0.0.0:2377)

--max-snapshots uint Number of additional Raft snapshots to retain

--snapshot-interval uint Number of log entries between Raft snapshots (default 10000)

--task-history-limit int Task history retention limit (default 5)

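3️⃣ Planning the Docker Swarm cluster nodes

To take advantage of swarm mode's fault tolerance, run an odd number of manager nodes, chosen according to your organization's high-availability requirements. With multiple managers, the cluster can recover from the failure of a manager node without downtime.

A swarm with three managers tolerates the loss of at most one manager.

A swarm with five managers tolerates the simultaneous loss of at most two managers.

A swarm with seven managers tolerates the simultaneous loss of at most three managers.

A swarm with nine managers tolerates the simultaneous loss of at most four managers.

Docker recommends a maximum of seven manager nodes per swarm.

(Adding more managers does not mean better scalability or performance. In general, the opposite is true.)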
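The tolerances listed above follow from majority voting among the managers: the swarm stays manageable only while more than half of them are available. Here is a minimal Python sketch of that arithmetic. The function names `quorum` and `fault_tolerance` are made up for this illustration and are not part of any Docker tooling.

```python
def quorum(managers: int) -> int:
    """Smallest majority among `managers` manager nodes."""
    return managers // 2 + 1


def fault_tolerance(managers: int) -> int:
    """Number of managers that can be lost while a majority still survives."""
    return (managers - 1) // 2


for n in (1, 2, 3, 4, 5, 7, 9):
    print(f"{n} managers: quorum {quorum(n)}, tolerates {fault_tolerance(n)} failure(s)")
```

Note that an even manager count buys nothing: four managers tolerate one failure, exactly like three, which is why odd numbers are recommended.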

| Hostname | IP address | Docker version | Role | Notes |
|---|---|---|---|---|
| docker-m1 | 192.168.200.81 | 20.10.14 | Manager node | Leader |
| docker-m2 | 192.168.200.82 | 20.10.14 | Manager node | Follower |
| docker-m3 | 192.168.200.83 | 20.10.14 | Manager node | Follower |
| docker-n1 | 192.168.200.91 | 20.10.14 | Worker node | Worker |
| docker-n2 | 192.168.200.92 | 20.10.14 | Worker node | Worker |

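4️⃣ Creating a highly available Docker Swarm cluster, step by step

The procedure has two steps:

- ① Initialize the first manager node with init

- ② Join the remaining nodes (managers and workers)

📃 Preparing the environment

- 1. Set the hostname

- 2. Configure the IP address

- 3. Disable the firewall and SELinux

- 4. Configure the system YUM repositories and a Docker registry mirror

- 5. Update the system (yum update -y)

- 6. Install Docker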
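Step 3 turns the firewall off completely, which is fine for a lab. As a hedged alternative, the sketch below generates firewalld commands that open only the ports swarm mode uses by default: 2377/tcp for cluster management, 7946/tcp and 7946/udp for node-to-node communication, and 4789/udp for overlay network traffic. It assumes firewalld is the active firewall (the hosts in this article run CentOS 7). `SWARM_PORTS` and `firewalld_commands` are names invented for this example. The script only prints the commands and runs nothing.

```python
# Default swarm-mode ports; adjust if you changed --listen-addr or --data-path-port.
SWARM_PORTS = [
    (2377, "tcp"),  # cluster management traffic to manager nodes
    (7946, "tcp"),  # node-to-node communication
    (7946, "udp"),  # node-to-node communication
    (4789, "udp"),  # overlay network (VXLAN) data path
]


def firewalld_commands(ports=SWARM_PORTS):
    """Build the firewall-cmd invocations that open the given ports permanently."""
    commands = [
        f"firewall-cmd --permanent --add-port={port}/{proto}"
        for port, proto in ports
    ]
    commands.append("firewall-cmd --reload")  # apply the permanent rules
    return commands


for line in firewalld_commands():
    print(line)
```

Review the printed commands before running them as root on each node.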

# Check the Docker version

[root@docker-m1 ~]# docker -v

Docker version 20.10.14, build a224086

# Default networks present right after Docker is installed

[root@docker ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

a656864d027c bridge bridge local

9fd62dbfb07f host host local

27700772b8f7 none null local

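🎦 Creating the cluster

$ docker swarm init --advertise-addr <MANAGER-IP>

🍎 docker-m1 (manager)

# Initialize a new swarm on the first manager node. The output includes the command for adding worker nodes to the cluster.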

[root@docker-m1 ~]# docker swarm init --advertise-addr 192.168.200.81

Swarm initialized: current node (34cug51p9dw83u2np594z6ej4) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-528o8bfk061miheduvuvnnohhpystvxnwiqfqqf04gou6n1wmz-3ixu6we70ghk69wghfrmo0y6a 192.168.200.81:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

[root@docker-m1 ~]#

# Run the following command to print the join command for adding new manager nodes to the cluster.

[root@docker-m1 ~]# docker swarm join-token manager

To add a manager to this swarm, run the following command:

docker swarm join --token SWMTKN-1-528o8bfk061miheduvuvnnohhpystvxnwiqfqqf04gou6n1wmz-1z6k8msio37as0vaa467glefx 192.168.200.81:2377

[root@docker-m1 ~]#
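Comparing the two join commands above, the worker and manager tokens share everything except the last segment. As far as the Docker documentation describes it, a token carries a digest of the swarm's root CA certificate plus a role-specific secret. The Python sketch below splits the two tokens from this article to make that visible. `parse_join_token` is a helper invented for this illustration, and the `SWMTKN-<version>-<digest>-<secret>` layout is an assumption read off the tokens shown here.

```python
def parse_join_token(token: str) -> dict:
    """Split a swarm join token into its dash-separated parts."""
    prefix, version, ca_digest, secret = token.split("-")
    if prefix != "SWMTKN":
        raise ValueError("not a swarm join token")
    return {"version": version, "ca_digest": ca_digest, "secret": secret}


# Tokens copied from the transcript in this article.
worker = parse_join_token(
    "SWMTKN-1-528o8bfk061miheduvuvnnohhpystvxnwiqfqqf04gou6n1wmz-3ixu6we70ghk69wghfrmo0y6a"
)
manager = parse_join_token(
    "SWMTKN-1-528o8bfk061miheduvuvnnohhpystvxnwiqfqqf04gou6n1wmz-1z6k8msio37as0vaa467glefx"
)

print("same CA digest:", worker["ca_digest"] == manager["ca_digest"])
print("same secret:", worker["secret"] == manager["secret"])
```

The practical consequence is that the token alone decides the role: joining with the manager token makes the node a manager, and joining with the worker token makes it a worker.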

🍑 docker-m2 (manager)

# Join the cluster as a manager node

[root@docker-m2 ~]# docker swarm join --token SWMTKN-1-528o8bfk061miheduvuvnnohhpystvxnwiqfqqf04gou6n1wmz-1z6k8msio37as0vaa467glefx 192.168.200.81:2377

This node joined a swarm as a manager.

🍊 docker-m3 (manager)

# Join the cluster as a manager node

[root@docker-m3 ~]# docker swarm join --token SWMTKN-1-528o8bfk061miheduvuvnnohhpystvxnwiqfqqf04gou6n1wmz-1z6k8msio37as0vaa467glefx 192.168.200.81:2377

This node joined a swarm as a manager.

🍌 docker-n1 (worker)

# Join the cluster as a worker node

[root@docker-n1 ~]# docker swarm join --token SWMTKN-1-528o8bfk061miheduvuvnnohhpystvxnwiqfqqf04gou6n1wmz-3ixu6we70ghk69wghfrmo0y6a 192.168.200.81:2377

This node joined a swarm as a worker.

🍐 docker-n2 (worker)

# Join the cluster as a worker node

[root@docker-n2 ~]# docker swarm join --token SWMTKN-1-528o8bfk061miheduvuvnnohhpystvxnwiqfqqf04gou6n1wmz-3ixu6we70ghk69wghfrmo0y6a 192.168.200.81:2377

This node joined a swarm as a worker.

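🔨 Checking the configuration

Check the status of the cluster nodes

docker-m1 is the leader (the primary manager), while docker-m2 and docker-m3 are standby managers;

the two worker nodes docker-n1 and docker-n2 have also joined the cluster successfully.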

[root@docker-m1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

34cug51p9dw83u2np594z6ej4 * docker-m1 Ready Active Leader 20.10.14

hwmwdk78u3rx0wwxged87xnun docker-m2 Ready Active Reachable 20.10.14

4q34guc6hp2a5ok0g1zkjojyh docker-m3 Ready Active Reachable 20.10.14

4om9sg56sg09t9whelbrkh8qn docker-n1 Ready Active 20.10.14

xooolkg0g9epddfqqiicywshe docker-n2 Ready Active 20.10.14

[root@docker-m1 ~]#
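To check the same listing from a script, `docker node ls --format '{{json .}}'` prints one JSON object per node. The Python sketch below summarizes such output. The sample lines are hand-written from the table above, not captured output, and the key names `Hostname`, `Status` and `ManagerStatus` are assumed to match what your Docker version emits. `summarize` is a name invented for this example.

```python
import json

# Illustrative input, modeled on the node list above (one JSON object per line).
SAMPLE = """\
{"Hostname": "docker-m1", "Status": "Ready", "ManagerStatus": "Leader"}
{"Hostname": "docker-m2", "Status": "Ready", "ManagerStatus": "Reachable"}
{"Hostname": "docker-m3", "Status": "Ready", "ManagerStatus": "Reachable"}
{"Hostname": "docker-n1", "Status": "Ready", "ManagerStatus": ""}
{"Hostname": "docker-n2", "Status": "Ready", "ManagerStatus": ""}
"""


def summarize(json_lines: str) -> dict:
    """Group nodes into managers and workers and find the current leader."""
    nodes = [json.loads(line) for line in json_lines.splitlines() if line.strip()]
    managers = [n["Hostname"] for n in nodes if n.get("ManagerStatus")]
    workers = [n["Hostname"] for n in nodes if not n.get("ManagerStatus")]
    leader = next(
        (n["Hostname"] for n in nodes if n.get("ManagerStatus") == "Leader"), None
    )
    return {"managers": managers, "workers": workers, "leader": leader}


print(summarize(SAMPLE))
```

On a real manager node you could feed it the command's output instead of `SAMPLE`, for example via `subprocess.run(..., capture_output=True, text=True).stdout`.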

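Check the overall Docker system information

The output confirms that the docker swarm cluster has been created.

There are five nodes in total, three of which are manager nodes.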

[root@docker-m1 ~]# docker info

Client:

Context: default

Debug Mode: false

Plugins:

app: Docker App (Docker Inc., v0.9.1-beta3)

buildx: Docker Buildx (Docker Inc., v0.8.1-docker)

scan: Docker Scan (Docker Inc., v0.17.0)

Server:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 20.10.14

Storage Driver: overlay2

Backing Filesystem: xfs

Supports d_type: true

Native Overlay Diff: true

userxattr: false

Logging Driver: json-file

Cgroup Driver: systemd

Cgroup Version: 1

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: active

NodeID: 34cug51p9dw83u2np594z6ej4

Is Manager: true

ClusterID: v1r77dlrbucscss3tss6edpfv

Managers: 3

Nodes: 5

Default Address Pool: 10.0.0.0/8

SubnetSize: 24

Data Path Port: 4789

Orchestration:

Task History Retention Limit: 5

Raft:

Snapshot Interval: 10000

Number of Old Snapshots to Retain: 0

Heartbeat Tick: 1

Election Tick: 10

Dispatcher:

Heartbeat Period: 5 seconds

CA Configuration:

Expiry Duration: 3 months

Force Rotate: 0

Autolock Managers: false

Root Rotation In Progress: false

Node Address: 192.168.200.81

Manager Addresses:

192.168.200.81:2377

192.168.200.82:2377

192.168.200.83:2377

Runtimes: io.containerd.runc.v2 io.containerd.runtime.v1.linux runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 3df54a852345ae127d1fa3092b95168e4a88e2f8

runc version: v1.0.3-0-gf46b6ba

init version: de40ad0

Security Options:

seccomp

Profile: default

Kernel Version: 3.10.0-1160.62.1.el7.x86_64

Operating System: CentOS Linux 7 (Core)

OSType: linux

Architecture: x86_64

CPUs: 1

Total Memory: 1.934GiB

Name: docker-m1

ID: YIQB:NBLI:MUUN:35IY:ESCK:QPI3:CIZP:U2AS:WV7D:E57G:H7CO:WBWI

Docker Root Dir: /var/lib/docker

Debug Mode: false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

127.0.0.0/8

Registry Mirrors:

https://w2kavmmf.mirror.aliyuncs.com/

Live Restore Enabled: false

WARNING: IPv4 forwarding is disabled

WARNING: bridge-nf-call-iptables is disabled

WARNING: bridge-nf-call-ip6tables is disabled

[root@docker-m1 ~]#

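Inspect the cluster network information

The Peers list of the ingress network shows the IP address of every node in the cluster.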

[root@docker-m1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

a656864d027c bridge bridge local

1359459aa236 docker_gwbridge bridge local

9fd62dbfb07f host host local

6ipkh8htdyiv ingress overlay swarm

27700772b8f7 none null local

[root@docker-m1 ~]# docker network inspect 6ipkh8htdyiv

[

{

"Name": "ingress",

"Id": "6ipkh8htdyivqfqwcdcehu8mb",

"Created": "2022-05-03T18:51:39.108622642+08:00",

"Scope": "swarm",

"Driver": "overlay",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "10.0.0.0/24",

"Gateway": "10.0.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": true,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"ingress-sbox": {

"Name": "ingress-endpoint",

"EndpointID": "aaa7e77674405f75c1ef8ecf563a5e1745778e9fa698863a243d32121c58dcc5",

"MacAddress": "02:42:0a:00:00:02",

"IPv4Address": "10.0.0.2/24",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.driver.overlay.vxlanid_list": "4096"

},

"Labels": {

},

"Peers": [

{

"Name": "052c54656ba2",

"IP": "192.168.200.81"

},

{

"Name": "e9e6959ea728",

"IP": "192.168.200.82"

},

{

"Name": "08a7107b1250",

"IP": "192.168.200.83"

},

{

"Name": "b0e6bcd74c9f",

"IP": "192.168.200.91"

},

{

"Name": "0d537d72fb87",

"IP": "192.168.200.92"

}

]

}

]

[root@docker-m1 ~]#

5️⃣ Changing roles: demoting a manager to a worker

Taking docker-m3 as an example, change its role from manager to worker.

# Show the help for the command

[root@docker-m1 ~]# docker node update -h

Flag shorthand -h has been deprecated, please use --help

Usage: docker node update [OPTIONS] NODE

Update a node

Options:

--availability string Availability of the node ("active"|"pause"|"drain")

--label-add list Add or update a node label (key=value)

--label-rm list Remove a node label if exists

--role string Role of the node ("worker"|"manager")

# Run the following commands to change docker-m3 from the manager role to the worker role.

[root@docker-m1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

34cug51p9dw83u2np594z6ej4 * docker-m1 Ready Active Leader 20.10.14

hwmwdk78u3rx0wwxged87xnun docker-m2 Ready Active Reachable 20.10.14

4q34guc6hp2a5ok0g1zkjojyh docker-m3 Ready Active Reachable 20.10.14

4om9sg56sg09t9whelbrkh8qn docker-n1 Ready Active 20.10.14

xooolkg0g9epddfqqiicywshe docker-n2 Ready Active 20.10.14

[root@docker-m1 ~]# docker node update --role worker docker-m3

docker-m3

[root@docker-m1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

34cug51p9dw83u2np594z6ej4 * docker-m1 Ready Active Leader 20.10.14

hwmwdk78u3rx0wwxged87xnun docker-m2 Ready Active Reachable 20.10.14

4q34guc6hp2a5ok0g1zkjojyh docker-m3 Ready Active 20.10.14

4om9sg56sg09t9whelbrkh8qn docker-n1 Ready Active 20.10.14

xooolkg0g9epddfqqiicywshe docker-n2 Ready Active 20.10.14

[root@docker-m1 ~]#

# After the change, inspect the details of the docker-m3 node

# The Role field shows that it has changed from a manager node to a worker node

[root@docker-m1 ~]# docker node inspect 4q34guc6hp2a5ok0g1zkjojyh

[

{

"ID": "4q34guc6hp2a5ok0g1zkjojyh",

"Version": {

"Index": 39

},

"CreatedAt": "2022-05-03T10:59:07.69499678Z",

"UpdatedAt": "2022-05-03T11:27:02.178601504Z",

"Spec": {

"Labels": {

},

"Role": "worker",

"Availability": "active"

},

"Description": {

"Hostname": "docker-m3",

"Platform": {

"Architecture": "x86_64",

"OS": "linux"

},

"Resources": {

"NanoCPUs": 1000000000,

"MemoryBytes": 2076499968

},

"Engine": {

"EngineVersion": "20.10.14",

"Plugins": [

{

"Type": "Log",

"Name": "awslogs"

},

{

"Type": "Log",

"Name": "fluentd"

},

{

"Type": "Log",

"Name": "gcplogs"

},

{

"Type": "Log",

"Name": "gelf"

},

{

"Type": "Log",

"Name": "journald"

},

{

"Type": "Log",

"Name": "json-file"

},

{

"Type": "Log",

"Name": "local"

},

{

"Type": "Log",

"Name": "logentries"

},

{

"Type": "Log",

"Name": "splunk"

},

{

"Type": "Log",

"Name": "syslog"

},

{

"Type": "Network",

"Name": "bridge"

},

{

"Type": "Network",

"Name": "host"

},

{

"Type": "Network",

"Name": "ipvlan"

},

{

"Type": "Network",

"Name": "macvlan"

},

{

"Type": "Network",

"Name": "null"

},

{

"Type": "Network",

"Name": "overlay"

},

{

"Type": "Volume",

"Name": "local"

}

]

},

"TLSInfo": {

"TrustRoot": "-----BEGIN CERTIFICATE-----\nMIIBaTCCARCgAwIBAgIUYUzIe4mqhjKYxuilbhVByLwzzeMwCgYIKoZIzj0EAwIw\nEzERMA8GA1UEAxMIc3dhcm0tY2EwHhcNMjIwNTAzMTA0NzAwWhcNNDIwNDI4MTA0\nNzAwWjATMREwDwYDVQQDEwhzd2FybS1jYTBZMBMGByqGSM49AgEGCCqGSM49AwEH\nA0IABK8XzVHRM50TgsAxrgXg18ti69dkedf9LsaHm2I2ub9kKzkLsnTV+bIHGOHK\n0/Twi/B9OCFSsozUGDP7qR3/rRmjQjBAMA4GA1UdDwEB/wQEAwIBBjAPBgNVHRMB\nAf8EBTADAQH/MB0GA1UdDgQWBBQ3iXSq5FKnODK2Qqic39A0bg9qjjAKBggqhkjO\nPQQDAgNHADBEAiASv1HdziErIzBJtsVMxfp8zAv0EJ5/eVeIldYdUIVNTQIgXUc3\nakty/iBy5/MhFt9JRRMV1xH1x+Dcf35tNWGH52w=\n-----END CERTIFICATE-----\n",

"CertIssuerSubject": "MBMxETAPBgNVBAMTCHN3YXJtLWNh",

"CertIssuerPublicKey": "MFkwEwYHKoZIzj0CAQYIKoZIzj0DAQcDQgAErxfNUdEznROCwDGuBeDXy2Lr12R51/0uxoebYja5v2QrOQuydNX5sgcY4crT9PCL8H04IVKyjNQYM/upHf+tGQ=="

}

},

"Status": {

"State": "ready",

"Addr": "192.168.200.83"

}

}

]

[root@docker-m1 ~]#

6️⃣ Changing roles: promoting a worker to a manager

Taking docker-n2 as an example, change this worker node's role from worker to manager.

[root@docker-m1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

34cug51p9dw83u2np594z6ej4 * docker-m1 Ready Active Leader 20.10.14

hwmwdk78u3rx0wwxged87xnun docker-m2 Ready Active Reachable 20.10.14

4q34guc6hp2a5ok0g1zkjojyh docker-m3 Ready Active 20.10.14

4om9sg56sg09t9whelbrkh8qn docker-n1 Ready Active 20.10.14

xooolkg0g9epddfqqiicywshe docker-n2 Ready Active 20.10.14

[root@docker-m1 ~]# docker node update --role manager docker-n2

docker-n2

[root@docker-m1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

34cug51p9dw83u2np594z6ej4 * docker-m1 Ready Active Leader 20.10.14

hwmwdk78u3rx0wwxged87xnun docker-m2 Ready Active Reachable 20.10.14

4q34guc6hp2a5ok0g1zkjojyh docker-m3 Ready Active 20.10.14

4om9sg56sg09t9whelbrkh8qn docker-n1 Ready Active 20.10.14

xooolkg0g9epddfqqiicywshe docker-n2 Ready Active Reachable 20.10.14

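7️⃣ Removing and re-adding a manager node

Remove a manager node from the cluster, obtain the manager join token again, and add the node back to the cluster.

# Show the help for the command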

[root@docker-m1 ~]# docker swarm leave --help

Usage: docker swarm leave [OPTIONS]

Leave the swarm

Options:

-f, --force Force this node to leave the swarm, ignoring warnings

[root@docker-m1 ~]#

On docker-m3, make the manager node docker-m3 leave the cluster

[root@docker-m3 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

34cug51p9dw83u2np594z6ej4 docker-m1 Ready Active Leader 20.10.14

hwmwdk78u3rx0wwxged87xnun docker-m2 Ready Active Reachable 20.10.14

4q34guc6hp2a5ok0g1zkjojyh * docker-m3 Ready Active Reachable 20.10.14

4om9sg56sg09t9whelbrkh8qn docker-n1 Ready Active 20.10.14

xooolkg0g9epddfqqiicywshe docker-n2 Ready Active 20.10.14

[root@docker-m3 ~]# docker swarm leave -f

Node left the swarm.

Check from the manager node docker-m1. After a short delay, docker-m3 is reported as Down

[root@docker-m1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

34cug51p9dw83u2np594z6ej4 * docker-m1 Ready Active Leader 20.10.14

hwmwdk78u3rx0wwxged87xnun docker-m2 Ready Active Reachable 20.10.14

4q34guc6hp2a5ok0g1zkjojyh docker-m3 Ready Active Reachable 20.10.14

4om9sg56sg09t9whelbrkh8qn docker-n1 Ready Active 20.10.14

xooolkg0g9epddfqqiicywshe docker-n2 Ready Active 20.10.14

[root@docker-m1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

34cug51p9dw83u2np594z6ej4 * docker-m1 Ready Active Leader 20.10.14

hwmwdk78u3rx0wwxged87xnun docker-m2 Ready Active Reachable 20.10.14

4q34guc6hp2a5ok0g1zkjojyh docker-m3 Down Active Unreachable 20.10.14

4om9sg56sg09t9whelbrkh8qn docker-n1 Ready Active 20.10.14

xooolkg0g9epddfqqiicywshe docker-n2 Ready Active 20.10.14

Obtain the join command for manager nodes again.

Run docker swarm join-token manager to print it.

[root@docker-m1 ~]# docker swarm join-token manager

To add a manager to this swarm, run the following command:

docker swarm join --token SWMTKN-1-528o8bfk061miheduvuvnnohhpystvxnwiqfqqf04gou6n1wmz-1z6k8msio37as0vaa467glefx 192.168.200.81:2377

[root@docker-m1 ~]#

Add docker-m3 back to the cluster as a manager node.

[root@docker-m3 ~]# docker swarm join --token SWMTKN-1-528o8bfk061miheduvuvnnohhpystvxnwiqfqqf04gou6n1wmz-1z6k8msio37as0vaa467glefx 192.168.200.81:2377

This node joined a swarm as a manager.

[root@docker-m3 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

34cug51p9dw83u2np594z6ej4 docker-m1 Ready Active Leader 20.10.14

hwmwdk78u3rx0wwxged87xnun docker-m2 Ready Active Reachable 20.10.14

4q34guc6hp2a5ok0g1zkjojyh docker-m3 Down Active Reachable 20.10.14

jvtiwv8eu45ev4qbm0ausivv2 * docker-m3 Ready Active Reachable 20.10.14

4om9sg56sg09t9whelbrkh8qn docker-n1 Ready Active 20.10.14

xooolkg0g9epddfqqiicywshe docker-n2 Ready Active 20.10.14

[root@docker-m3 ~]#

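8️⃣ Removing and re-adding a worker node

Remove a worker node from the cluster, obtain the worker join token again, and add the node back to the cluster.

On docker-n1, make the worker node docker-n1 leave the cluster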

[root@docker-n1 ~]# docker swarm leave

Node left the swarm.

Check from the manager node docker-m1. The worker node docker-n1 is now reported as Down

[root@docker-m1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

34cug51p9dw83u2np594z6ej4 * docker-m1 Ready Active Leader 20.10.14

hwmwdk78u3rx0wwxged87xnun docker-m2 Ready Active Reachable 20.10.14

4q34guc6hp2a5ok0g1zkjojyh docker-m3 Down Active Reachable 20.10.14

jvtiwv8eu45ev4qbm0ausivv2 docker-m3 Ready Active Reachable 20.10.14

4om9sg56sg09t9whelbrkh8qn docker-n1 Down Active 20.10.14

xooolkg0g9epddfqqiicywshe docker-n2 Ready Active 20.10.14

Obtain the join command for worker nodes again.

Run docker swarm join-token worker to print it.

[root@docker-m1 ~]# docker swarm join-token worker

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-528o8bfk061miheduvuvnnohhpystvxnwiqfqqf04gou6n1wmz-3ixu6we70ghk69wghfrmo0y6a 192.168.200.81:2377

[root@docker-m1 ~]#

Add docker-n1 back to the cluster as a worker node.

[root@docker-n1 ~]# docker swarm join --token SWMTKN-1-528o8bfk061miheduvuvnnohhpystvxnwiqfqqf04gou6n1wmz-3ixu6we70ghk69wghfrmo0y6a 192.168.200.81:2377

This node joined a swarm as a worker.

Remove the stale node entries left behind in the node list.

[root@docker-m1 ~]# docker node rm 34emdxnfc139d6kc4ht2xsp4b 4om9sg56sg09t9whelbrkh8qn

34emdxnfc139d6kc4ht2xsp4b

4om9sg56sg09t9whelbrkh8qn

[root@docker-m1 ~]#

9️⃣ Deploying an NGINX test application in the cluster

🏉 The service help command

# Show the help for docker service

[root@docker-m1 ~]# docker service

Usage: docker service COMMAND

Manage services

Commands:

create Create a new service

inspect Display detailed information on one or more services

logs Fetch the logs of a service or task

ls List services

ps List the tasks of one or more services

rm Remove one or more services

rollback Revert changes to a service's configuration

scale Scale one or multiple replicated services

update Update a service

Run 'docker service COMMAND --help' for more information on a command.

[root@docker-m1 ~]#

⚾️ Creating the NGINX service

# 1. Search for the image

[root@docker-m1 ~]# docker search nginx

NAME DESCRIPTION STARS OFFICIAL AUTOMATED

nginx Official build of Nginx. 16720 [OK]

bitnami/nginx Bitnami nginx Docker Image 124 [OK]

ubuntu/nginx Nginx, a high-performance reverse proxy & we… 46

bitnami/nginx-ingress-controller Bitnami Docker Image for NGINX Ingress Contr… 17 [OK]

rancher/nginx-ingress-controller 10

ibmcom/nginx-ingress-controller Docker Image for IBM Cloud Private-CE (Commu… 4

bitnami/nginx-ldap-auth-daemon 3

bitnami/nginx-exporter 2

rancher/nginx-ingress-controller-defaultbackend 2

circleci/nginx This image is for internal use 2

vmware/nginx 2

vmware/nginx-photon 1

bitnami/nginx-intel 1

rancher/nginx 1

wallarm/nginx-ingress-controller Kubernetes Ingress Controller with Wallarm e… 1

rancher/nginx-conf 0

rancher/nginx-ssl 0

ibmcom/nginx-ppc64le Docker image for nginx-ppc64le 0

rancher/nginx-ingress-controller-amd64 0

continuumio/nginx-ingress-ws 0

ibmcom/nginx-ingress-controller-ppc64le Docker Image for IBM Cloud Private-CE (Commu… 0

kasmweb/nginx An Nginx image based off nginx:alpine and in… 0

rancher/nginx-proxy 0

wallarm/nginx-ingress-controller-amd64 Kubernetes Ingress Controller with Wallarm e… 0

ibmcom/nginx-ingress-controller-amd64 0

[root@docker-m1 ~]#

# 2. Pull the image

[root@docker-m1 ~]# docker pull nginx

Using default tag: latest

latest: Pulling from library/nginx

a2abf6c4d29d: Pull complete

a9edb18cadd1: Pull complete

589b7251471a: Pull complete

186b1aaa4aa6: Pull complete

b4df32aa5a72: Pull complete

a0bcbecc962e: Pull complete

Digest: sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31

Status: Downloaded newer image for nginx:latest

docker.io/library/nginx:latest

[root@docker-m1 ~]#

# 3. List the local images

[root@docker-m1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx latest 605c77e624dd 4 months ago 141MB

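# 4. Start NGINX with the service command

docker run starts a single container and cannot scale it.

docker service starts a service, which supports scaling out and in as well as rolling updates.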

[root@docker-m1 ~]# docker service create -p 8888:80 --name xybdiy-nginx nginx

ngoi21hcjan5qoro9amd7n1jh

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

[root@docker-m1 ~]#

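🏀 Inspecting the NGINX service

The nginx task was scheduled on the worker node docker-n2. The swarm scheduler picks the node, so the placement may differ on your cluster.

# List the NGINX service and its tasks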

[root@docker-m1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ngoi21hcjan5 xybdiy-nginx replicated 1/1 nginx:latest *:8888->80/tcp

[root@docker-m1 ~]# docker service ps xybdiy-nginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

w5azhbc3xrta xybdiy-nginx.1 nginx:latest docker-n2 Running Running 20 minutes ago

[root@docker-m1 ~]#

[root@docker-n2 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d65e6e8bf5fd nginx:latest "/docker-entrypoint.…" 28 minutes ago Up 28 minutes 80/tcp xybdiy-nginx.1.w5azhbc3xrtafxvftkgh7x9vk

# Inspect the details of the NGINX service

[root@docker-m1 ~]# docker service inspect xybdiy-nginx

[

{

"ID": "ngoi21hcjan5qoro9amd7n1jh",

"Version": {

"Index": 34

},

"CreatedAt": "2022-05-03T12:38:22.234486876Z",

"UpdatedAt": "2022-05-03T12:38:22.238903441Z",

"Spec": {

"Name": "xybdiy-nginx",

"Labels": {

},

"TaskTemplate": {

"ContainerSpec": {

"Image": "nginx:latest@sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31",

"Init": false,

"StopGracePeriod": 10000000000,

"DNSConfig": {

},

"Isolation": "default"

},

"Resources": {

"Limits": {

},

"Reservations": {

}

},

"RestartPolicy": {

"Condition": "any",

"Delay": 5000000000,

"MaxAttempts": 0

},

"Placement": {

"Platforms": [

{

"Architecture": "amd64",

"OS": "linux"

},

{

"OS": "linux"

},

{

"OS": "linux"

},

{

"Architecture": "arm64",

"OS": "linux"

},

{

"Architecture": "386",

"OS": "linux"

},

{

"Architecture": "mips64le",

"OS": "linux"

},

{

"Architecture": "ppc64le",

"OS": "linux"

},

{

"Architecture": "s390x",

"OS": "linux"

}

]

},

"ForceUpdate": 0,

"Runtime": "container"

},

"Mode": {

"Replicated": {

"Replicas": 1

}

},

"UpdateConfig": {

"Parallelism": 1,

"FailureAction": "pause",

"Monitor": 5000000000,

"MaxFailureRatio": 0,

"Order": "stop-first"

},

"RollbackConfig": {

"Parallelism": 1,

"FailureAction": "pause",

"Monitor": 5000000000,

"MaxFailureRatio": 0,

"Order": "stop-first"

},

"EndpointSpec": {

"Mode": "vip",

"Ports": [

{

"Protocol": "tcp",

"TargetPort": 80,

"PublishedPort": 8888,

"PublishMode": "ingress"

}

]

}

},

"Endpoint": {

"Spec": {

"Mode": "vip",

"Ports": [

{

"Protocol": "tcp",

"TargetPort": 80,

"PublishedPort": 8888,

"PublishMode": "ingress"

}

]

},

"Ports": [

{

"Protocol": "tcp",

"TargetPort": 80,

"PublishedPort": 8888,

"PublishMode": "ingress"

}

],

"VirtualIPs": [

{

"NetworkID": "uhjulzndxnofx63e2bb3r8iq9",

"Addr": "10.0.0.7/24"

}

]

}

}

]

[root@docker-m1 ~]#

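🏈 Creating multiple NGINX service replicas

Scale out dynamically to relieve the access load on a single host.

Show the help for the update command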

[root@docker-m1 ~]# docker service update --help

Usage: docker service update [OPTIONS] SERVICE

Update a service

Options:

......

-q, --quiet Suppress progress output

--read-only Mount the container's root filesystem as read only

--replicas uint Number of tasks

--replicas-max-per-node uint Maximum number of tasks per node (default 0 = unlimited)

......

Create multiple NGINX service replicas

[root@docker-m1 ~]# docker service update --replicas 2 xybdiy-nginx

xybdiy-nginx

overall progress: 2 out of 2 tasks

1/2: running [==================================================>]

2/2: running [==================================================>]

verify: Service converged

[root@docker-m1 ~]#
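The `docker service` help shown earlier also lists a `scale` subcommand, which sets the replica count with a `SERVICE=REPLICAS` argument. The Python sketch below builds both command lines so the two spellings can be compared. The helper names are made up for this example, and nothing is executed: the script only prints the commands.

```python
import shlex


def update_replicas_command(service: str, replicas: int) -> list:
    """Argument list for the form used in this article."""
    return ["docker", "service", "update", "--replicas", str(replicas), service]


def scale_command(service: str, replicas: int) -> list:
    """Argument list for the shorter `docker service scale` form."""
    if replicas < 0:
        raise ValueError("replicas must not be negative")
    return ["docker", "service", "scale", f"{service}={replicas}"]


print(shlex.join(update_replicas_command("xybdiy-nginx", 2)))
print(shlex.join(scale_command("xybdiy-nginx", 2)))
```

Either list can be handed to `subprocess.run` on a manager node if you want to drive the scaling from a script.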

Check the NGINX service replicas that were created

[root@docker-m1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ngoi21hcjan5 xybdiy-nginx replicated 2/2 nginx:latest *:8888->80/tcp

[root@docker-m1 ~]# docker service ps xybdiy-nginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

w5azhbc3xrta xybdiy-nginx.1 nginx:latest docker-n2 Running Running 36 minutes ago

rgtjq163z9ch xybdiy-nginx.2 nginx:latest docker-m1 Running Running 33 seconds ago

Test access to the NGINX service

http://192.168.200.81:8888/

http://192.168.200.91:8888/
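The second URL points at docker-n1, which runs no replica at this point. It still answers because the port is published in ingress mode (see `"PublishMode": "ingress"` in the inspect output above), so every swarm node accepts connections on port 8888 and forwards them to a running task. The Python sketch below builds the test URL for each node and defines a small probe. `NODE_IPS`, `node_urls` and `probe` are names invented for this example. The probe is defined but not called, so running the script only prints the URLs.

```python
from urllib.error import HTTPError, URLError
from urllib.request import urlopen

# Node addresses taken from the planning table in this article.
NODE_IPS = [
    "192.168.200.81",
    "192.168.200.82",
    "192.168.200.83",
    "192.168.200.91",
    "192.168.200.92",
]


def node_urls(ips, port=8888):
    """One test URL per node for the published service port."""
    return [f"http://{ip}:{port}/" for ip in ips]


def probe(url, timeout=3.0):
    """Return the HTTP status code, or None if the node cannot be reached."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return response.status
    except HTTPError as error:  # the server answered, but with an error status
        return error.code
    except (URLError, OSError):  # refused, timed out, no route
        return None


print("\n".join(node_urls(NODE_IPS)))
```

From a machine that can reach the nodes, calling `probe(url)` for each URL should return 200 on every node while the service is healthy.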

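🏐 Simulating a failure

When the manager host docker-m1 goes down, check whether the NGINX service keeps running and remains reachable.

# Shut down the docker-m1 node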

[root@docker-m1 ~]# shutdown -h now

Connection to 192.168.200.81 closed by remote host.

Connection to 192.168.200.81 closed.

Check the node status

[root@docker-m2 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

75dxq2qmzr2bv4tkg20gh0syr docker-m1 Down Active Unreachable 20.10.14

l2is4spmgd4b5xmmxwo3jvuf4 * docker-m2 Ready Active Reachable 20.10.14

u89a2ie2buxuc5bsew4a2wrpo docker-m3 Ready Active Leader 20.10.14

aon2nakgk87rds5pque74itw4 docker-n1 Ready Active 20.10.14

ljdb9d3xkzjruuxsxrpmuei7s docker-n2 Ready Active 20.10.14

[root@docker-m2 ~]#

Check the service status

[root@docker-m2 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ngoi21hcjan5 xybdiy-nginx replicated 3/2 nginx:latest *:8888->80/tcp

[root@docker-m2 ~]# docker service ps xybdiy-nginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

w5azhbc3xrta xybdiy-nginx.1 nginx:latest docker-n2 Running Running 2 minutes ago

tteb16dnir6u xybdiy-nginx.2 nginx:latest docker-n1 Running Running 2 minutes ago

rgtjq163z9ch \_ xybdiy-nginx.2 nginx:latest docker-m1 Shutdown Running 17 minutes ago

[root@docker-m2 ~]#
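The cluster kept working here because two of the three managers stayed up, which is still a majority, so a new leader (docker-m3) could be elected. Here is a minimal Python sketch of that check, using the MANAGER STATUS values from the node list above. `has_quorum` is a name invented for this illustration.

```python
def has_quorum(manager_statuses) -> bool:
    """True while more than half of the managers are Leader or Reachable."""
    total = len(manager_statuses)
    healthy = sum(1 for status in manager_statuses if status in ("Leader", "Reachable"))
    return healthy > total // 2


# MANAGER STATUS column after docker-m1 was shut down.
after_failure = {
    "docker-m1": "Unreachable",
    "docker-m2": "Reachable",
    "docker-m3": "Leader",
}

print("quorum kept:", has_quorum(list(after_failure.values())))
```

Had a second manager gone down as well, the check would fail: one manager out of three is not a majority, and the swarm could no longer accept management commands until quorum is restored.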
