A Roundup of New Lane Detection Work from 2022, Covering Both 2D and 3D

Time: 2023-09-23 05:07:02

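Lane detection is a fundamental and important task in autonomous driving, and both academia and industry have invested heavily in it. I (Xiao Tang) have long been interested in lane detection and have developed related features at my company. I have also shared some related articles: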

Related links:

A survey of lane detection and recent new work

https://blog.csdn.net/qq_41590635/article/details/117386286

New lane detection work: VIL-100: A New Dataset and A Baseline Model for Video Instance Lane Detection (ICCV 2021)

https://blog.csdn.net/qq_41590635/article/details/120335328

CVPR 2022 lane detection: Efficient Lane Detection via Curve Modeling

https://blog.csdn.net/qq_41590635/article/details/124144880?spm=1001.2014.3001.5501

CVPR 2022 lane detection SOTA CLRNet: a test demo on the TuSimple dataset, helping bring autonomous driving to deployment sooner

https://blog.csdn.net/qq_41590635/article/details/124903793

HybridNet, an end-to-end multi-task perception network that outperforms YOLOP

https://blog.csdn.net/qq_41590635/article/details/125070914

New lane detection SOTA: CLRNet: Cross Layer Refinement Network for Lane Detection (CVPR 2022)

https://blog.csdn.net/qq_41590635/article/details/125136528

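Two days ago, in group 4 of our autonomous driving technical exchange group, several friends asked what new lane detection work had come out recently, so I (Tang) compiled the recent related work to share with friends working in this area. Peers and friends interested in lane detection, parking-spot detection, object detection, depth estimation, and related tasks are also welcome to join the technical exchange group to discuss and learn together.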

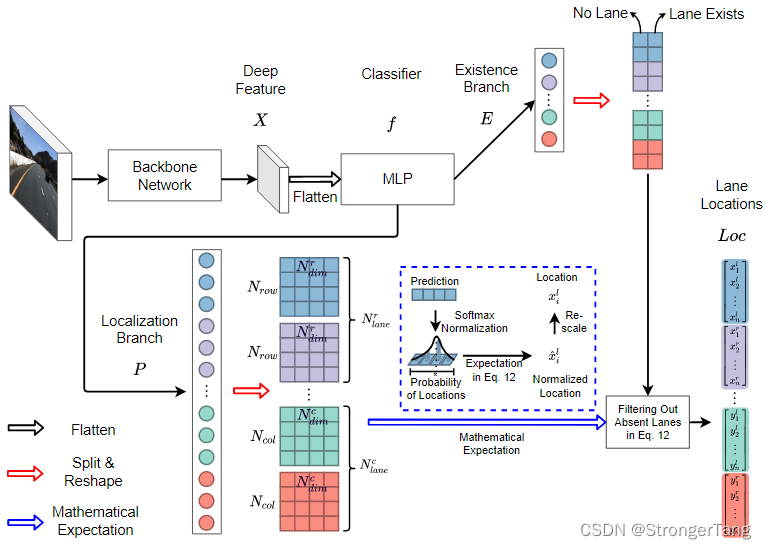

- Ultra Fast Deep Lane Detection with Hybrid Anchor Driven Ordinal Classification(UFLDv2)

Published (accepted): TPAMI 2022

Affiliation: Zhejiang University

Paper: https://arxiv.org/abs/2206.07389

Code: https://github.com/cfzd/Ultra-Fast-Lane-Detection-v2


- Rethinking Efficient Lane Detection via Curve Modeling

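Published (accepted): CVPR 2022

Affiliation: Shanghai Jiao Tong University, East China Normal University, City University of Hong Kong, SenseTime

Paper: https://arxiv.org/abs/2203.02431

Code: https://github.com/voldemortX/pytorch-auto-drive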

Paper walkthrough and demo:

CVPR 2022 lane detection: Efficient Lane Detection via Curve Modeling

https://blog.csdn.net/qq_41590635/article/details/124144880?spm=1001.2014.3001.5501

- CLRNet: Cross Layer Refinement Network for Lane Detection

Published (accepted): CVPR 2022

Affiliation: Fabu Technology (飞布科技), Zhejiang University

Paper: https://arxiv.org/pdf/2203.10350.pdf

Code: https://github.com/Turoad/CLRNet

Paper walkthrough and demo:

New lane detection SOTA: CLRNet: Cross Layer Refinement Network for Lane Detection (CVPR 2022)

https://blog.csdn.net/qq_41590635/article/details/125136528

- A Keypoint-based Global Association Network for Lane Detection

Published (accepted): CVPR 2022

Affiliation: Peking University, University of Science and Technology of China, SenseTime

Paper: https://arxiv.org/pdf/2204.07335.pdf

Code: https://github.com/Wolfwjs/GANet

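Proposes a Global Association Network (GANet) that formulates lane detection from a new perspective: each keypoint is directly regressed to the starting point of its lane line, instead of the lane being extended point by point.

Specifically, each keypoint is associated with its lane by predicting its offset to the corresponding lane start point over the whole image, rather than through dependencies between keypoints. Because every keypoint is handled independently, this can run in parallel, which greatly improves efficiency. In addition, a Lane-aware Feature Aggregator (LFA) adaptively captures local correlations between adjacent keypoints to supplement local information. While running at high FPS, GANet reaches an F1 score of 79.63% on CULane and 97.71% on TuSimple.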
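The offset-voting association described above can be illustrated with a small sketch. It is not GANet's actual post-processing code: it assumes keypoints, their predicted offsets to the lane start, and the detected start points are already available as lists of (x, y) pixel tuples, and the function name `associate_keypoints` and the threshold `max_dist` are hypothetical. Each keypoint adds its offset to "vote" for a start point and joins the nearest detected start within the threshold; no keypoint depends on any other, which is what makes the step parallelizable.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]  # (x, y) in image pixels


def associate_keypoints(
    keypoints: List[Point],
    offsets: List[Point],
    start_points: List[Point],
    max_dist: float = 5.0,  # hypothetical matching radius in pixels
) -> Dict[int, List[Point]]:
    """Group keypoints into lanes by voting for lane start points.

    Hypothetical sketch: keypoint k with predicted offset d votes for the
    location k + d; it is assigned to the nearest detected start point if
    that vote lands within max_dist, otherwise it is discarded.
    """
    lanes: Dict[int, List[Point]] = {i: [] for i in range(len(start_points))}
    for (kx, ky), (dx, dy) in zip(keypoints, offsets):
        # Each keypoint is processed independently of all other keypoints,
        # so this loop could be run in parallel.
        vote = (kx + dx, ky + dy)
        best: Optional[int] = None
        best_dist = max_dist
        for i, start in enumerate(start_points):
            dist = math.dist(vote, start)
            if dist <= best_dist:
                best, best_dist = i, dist
        if best is not None:
            lanes[best].append((kx, ky))
    return lanes


if __name__ == "__main__":
    starts = [(100.0, 590.0), (400.0, 590.0)]
    kps = [(110.0, 500.0), (405.0, 480.0), (120.0, 400.0)]
    offs = [(-10.0, 90.0), (-4.0, 109.0), (-21.0, 190.0)]
    print(associate_keypoints(kps, offs, starts))
```

In the usage example, the first and third keypoints vote for the left start point and the second for the right one, so the printed result groups them into two lanes.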


- Eigenlanes: Data-Driven Lane Descriptors for Structurally Diverse Lanes (SDLane dataset)

Published (accepted): CVPR 2022

Affiliation: Korea University, dot.ai

Paper: https://arxiv.org/abs/2203.15302

Code: https://github.com/dongkwonjin/Eigenlanes


- Towards Driving-Oriented Metric for Lane Detection Models

Published (accepted): CVPR 2022

Affiliation: University of California, Irvine

Paper: https://arxiv.org/abs/2203.16851

Code: https://github.com/ASGuard-UCI/ld-metric

Designs two new driving-oriented metrics for lane detection: the End-to-End Lateral Deviation metric (E2E-LD), formulated directly from the requirements of autonomous driving, a core downstream task of lane detection; and the Per-frame Simulated Lateral Deviation metric (PSLD), a lightweight surrogate of E2E-LD.
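To make "lateral deviation" concrete, here is a toy sketch. It is not the paper's E2E-LD or PSLD definition: those are defined through the vehicle's driving behavior, while this sketch simulates no vehicle motion at all. It assumes each lane line is a polyline of (x, y) points in vehicle coordinates (x lateral, y forward, in meters), takes the midpoint between the left and right lines at one look-ahead distance as the driving target, and reports how far the target from the detected lanes is from the target from the ground-truth lanes. The function names and the 10 m look-ahead are hypothetical.

```python
from typing import List, Tuple

Polyline = List[Tuple[float, float]]  # (x lateral, y forward), meters


def x_at(lane: Polyline, y: float) -> float:
    """Linearly interpolate a lane's lateral position x at forward distance y."""
    pts = sorted(lane, key=lambda p: p[1])
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        if y0 <= y <= y1:
            t = 0.0 if y1 == y0 else (y - y0) / (y1 - y0)
            return x0 + t * (x1 - x0)
    raise ValueError(f"lane does not cover y={y}")


def center_at(left: Polyline, right: Polyline, y: float) -> float:
    """Lateral position of the lane center: midpoint of left and right lines."""
    return 0.5 * (x_at(left, y) + x_at(right, y))


def lateral_deviation(
    pred: Tuple[Polyline, Polyline],
    gt: Tuple[Polyline, Polyline],
    lookahead: float = 10.0,  # hypothetical look-ahead distance in meters
) -> float:
    """Absolute offset between predicted and ground-truth lane centers."""
    return abs(center_at(*pred, lookahead) - center_at(*gt, lookahead))


if __name__ == "__main__":
    gt = ([(-1.8, 0.0), (-1.8, 30.0)], [(1.8, 0.0), (1.8, 30.0)])
    # The detected left line drifts inward with distance.
    pred = ([(-1.8, 0.0), (-1.2, 30.0)], [(1.8, 0.0), (1.8, 30.0)])
    print(f"{lateral_deviation(pred, gt):.3f} m")
```

The point of the example is the design idea behind driving-oriented metrics: a detection error matters in proportion to how much it would shift where the vehicle steers, rather than to how many pixels it misses.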

- ONCE-3DLanes: Building Monocular 3D Lane Detection

Published (accepted): CVPR 2022

Affiliation: Fudan University, Huawei Noah's Ark Lab

Paper: https://arxiv.org/pdf/2205.00301.pdf

Code: https://github.com/once-3dlanes/once_3dlanes_benchmark

The largest real-world lane detection dataset published to date, covering more complex road scenarios with various weather and lighting conditions and a variety of geographical locations.


- PersFormer: 3D Lane Detection via Perspective Transformer and the OpenLane Benchmark (OpenLane dataset)

Published (accepted): not yet known

Affiliation: Shanghai AI Laboratory, Purdue University, Carnegie Mellon University, SenseTime Research, Shanghai Jiao Tong University

Paper: https://arxiv.org/abs/2203.11089

Code: https://github.com/OpenPerceptionX/PersFormer_3DLane

Introduces OpenLane, the first real-world, large-scale 3D lane dataset, together with a corresponding benchmark to support research on this problem.


- HybridNets: End-to-End Perception Network

Published (accepted): not yet known


Paper: https://arxiv.org/ftp/arxiv/papers/2203/2203.09035.pdf

Code: https://github.com/datvuthanh/HybridNets

Demo:

HybridNet, an end-to-end multi-task perception network that outperforms YOLOP

https://blog.csdn.net/qq_41590635/article/details/125070914

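Friends and peers interested in computer vision tasks such as lane detection, parking-spot detection, object tracking, object detection, object classification, semantic segmentation, and depth estimation, as well as autonomous driving technology (perception, fusion, planning and control, localization, mapping, sensors, embedded deployment, and more), are welcome to join technical exchange group No. 4 to learn and have fun together!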